The Ethical Implications

Artificial intelligence (AI) is rapidly transforming education, offering unprecedented opportunities to personalize learning and improve outcomes. However, this technological revolution brings a complex web of ethical considerations. From algorithmic bias perpetuating existing inequalities to concerns about student data privacy and the evolving role of teachers, the integration of AI in education demands careful scrutiny and proactive mitigation strategies. The potential benefits are immense, but so are the risks if we fail to address the ethical challenges head-on.

This exploration delves into the multifaceted ethical implications of AI in education, examining how biases embedded in algorithms can disadvantage certain student populations, the crucial need for robust data protection measures, and the vital role of teacher professional development in navigating this new landscape. We will also analyze the impact on student agency, accessibility, and the overall fairness and equity of AI-powered learning systems.

The goal is to foster a responsible and ethical approach to AI integration, ensuring that technology serves to enhance, not hinder, the educational experience for all learners.

Algorithmic Bias and Fairness in Educational AI

The integration of Artificial Intelligence (AI) into education holds immense promise, offering personalized learning experiences and efficient administrative tools. However, the potential for algorithmic bias to exacerbate existing inequalities presents a significant ethical challenge. AI systems, trained on data reflecting societal biases, can perpetuate and even amplify these biases, leading to unfair and discriminatory outcomes for certain student populations.

Understanding and mitigating these biases is crucial for ensuring equitable access to quality education.

Bias in AI Algorithms and its Educational Impact

AI algorithms, at their core, learn patterns from the data they are trained on. If this data reflects existing societal biases – for instance, underrepresentation of certain demographics in high-achieving student groups or biased teacher evaluations – the algorithm will likely learn and replicate these biases. This can manifest in several ways: AI-powered tutoring systems might provide less challenging material to students from underrepresented groups, while simultaneously offering more advanced content to students from privileged backgrounds.

Similarly, AI-driven assessment tools might unfairly penalize students from certain linguistic or cultural backgrounds, leading to inaccurate evaluations of their capabilities. These biases can create a self-perpetuating cycle, further marginalizing already disadvantaged students and widening the achievement gap.
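One way to make this kind of bias visible is to compare how often each student group receives a favourable outcome, such as being offered advanced material. The sketch below is a minimal illustration, not a complete fairness audit: the group labels, the sample records, and the function names `selection_rates` and `disparate_impact_ratio` are all hypothetical, and a low ratio is only a prompt for closer human review.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system assigned the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received the outcome, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical audit log: which students were offered advanced content.
records = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'group_a': 0.6, 'group_b': 0.3}
print(ratio)  # 0.5
```

In this made-up log, students in group_b are offered advanced content half as often as students in group_a. A gap like that does not prove the system is unfair, but it is the kind of signal that should trigger an investigation of the training data and the model.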

Privacy and Data Security in Educational AI

The integration of artificial intelligence (AI) into educational platforms offers immense potential for personalized learning and improved educational outcomes. However, this progress comes with significant ethical concerns, particularly regarding the privacy and security of student data. The vast amounts of information collected (including learning styles, performance metrics, and even emotional responses) present a complex landscape of potential risks and responsibilities. Balancing the benefits of AI-driven education with the fundamental right to student privacy requires careful consideration and robust safeguards.

The ethical implications of collecting and using student data in AI-powered educational platforms are multifaceted. AI algorithms require substantial data to function effectively, often involving sensitive personal information. This raises concerns about potential misuse, unauthorized access, and the creation of discriminatory profiles. For instance, an AI system trained on biased data could perpetuate existing inequalities, unfairly disadvantaging certain student groups based on factors like socioeconomic status or ethnicity. Moreover, the long-term storage and potential repurposing of student data raise questions about ownership and control.

Students, parents, and educators must have a clear understanding of how their data is being used and protected.

Best Practices for Protecting Student Privacy and Data Security

Protecting student privacy and data security requires a multi-pronged approach incorporating technical, administrative, and legal measures. Data minimization is crucial; only the data necessary for the AI system’s function should be collected. Strong encryption protocols should be implemented to safeguard data both in transit and at rest. Regular security audits and vulnerability assessments are vital to identify and address potential weaknesses.

Furthermore, robust access control mechanisms should ensure that only authorized personnel can access student data. Transparency is key; clear and accessible privacy policies should inform students, parents, and educators about data collection, usage, and retention practices. Finally, regular employee training on data security best practices is essential to maintain a culture of responsible data handling.
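Data minimization and pseudonymization can be illustrated with a short sketch. It assumes a hypothetical record layout and a hypothetical allow-list, `ALLOWED_FIELDS`; the keyed hash uses Python's standard `hmac` and `hashlib` modules. Pseudonymized data can still be linked back to a student by anyone holding the key, so the key would need to be stored separately from the data and protected by the access controls described above.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the learning model is assumed to need.
ALLOWED_FIELDS = {"grade_level", "quiz_score", "time_on_task_minutes"}


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Replace a student ID with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the ID for a pseudonym."""
    reduced = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    reduced["student_ref"] = pseudonymize(record["student_id"], secret_key)
    return reduced


# Hypothetical raw record as it might arrive from a school information system.
raw = {
    "student_id": "s-001",
    "name": "Example Student",
    "home_address": "1 Example Street",
    "grade_level": 7,
    "quiz_score": 0.8,
}

# In practice the key would come from a secrets manager, not from source code.
stored = minimize(raw, secret_key=b"replace-with-a-managed-secret")
print(sorted(stored))  # ['grade_level', 'quiz_score', 'student_ref']
```

The name and address never reach storage, and the same student always maps to the same reference under a given key, so progress can still be tracked over time.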

Legal and Regulatory Frameworks Governing Student Data Use

The legal and regulatory landscape surrounding student data in AI educational tools is evolving rapidly. Laws like the Family Educational Rights and Privacy Act (FERPA) in the United States and the General Data Protection Regulation (GDPR) in the European Union provide crucial frameworks for protecting student data. Between them, these regulations set requirements covering consent, disclosure, transparency, and data security.

They also grant individuals rights over their data. FERPA gives parents and eligible students the right to inspect education records and request corrections, while the GDPR adds further rights, including the erasure of personal data. Compliance with these and other relevant regulations is paramount for developers and institutions deploying AI-powered educational systems. Failure to comply can result in significant penalties and reputational damage.

Checklist for Evaluating Data Privacy and Security Measures

A comprehensive checklist is essential for evaluating the data privacy and security measures of AI educational systems. This checklist should encompass:

- Data Minimization: Does the system collect only the necessary data?

- Data Encryption: Are appropriate encryption methods used for data in transit and at rest?

- Access Control: Are access controls implemented to restrict data access to authorized personnel?

- Data Retention Policies: Are clear data retention policies in place, specifying how long data is stored and how it is disposed of?

- Security Audits: Are regular security audits and vulnerability assessments conducted?

- Privacy Policy Transparency: Is the privacy policy clear, accessible, and understandable?

- Compliance with Regulations: Does the system comply with relevant data protection laws and regulations (e.g., FERPA, GDPR)?

- Data Breach Response Plan: Is a comprehensive data breach response plan in place?

- Parental/Guardian Consent: Is informed consent obtained from parents/guardians before collecting and using student data?

- Student Data Ownership and Control: Are mechanisms in place to allow students (or their guardians) to access, correct, or delete their data?

This checklist serves as a starting point for a thorough evaluation. The specific requirements will vary depending on the context and the specific AI system being used. However, a robust approach to data privacy and security is crucial to ensure the ethical and responsible use of AI in education.
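Some checklist items lend themselves to automated checks. As one illustration, the data-retention item could be backed by a routine that lists records held longer than the policy allows. The sketch below assumes a hypothetical record layout (`record_id`, `collected_on`) and a hypothetical 365-day retention period; a real policy would be set by the institution and applicable law.

```python
from datetime import date, timedelta


def records_past_retention(records, today, retention_days):
    """Return the IDs of records collected before the retention cutoff."""
    cutoff = today - timedelta(days=retention_days)
    return [r["record_id"] for r in records if r["collected_on"] < cutoff]


# Hypothetical stored records.
records = [
    {"record_id": "r1", "collected_on": date(2022, 9, 1)},
    {"record_id": "r2", "collected_on": date(2024, 1, 15)},
]

due_for_disposal = records_past_retention(
    records, today=date(2024, 6, 1), retention_days=365
)
print(due_for_disposal)  # ['r1']
```

Passing `today` in explicitly, rather than reading the system clock inside the function, keeps the check easy to test and to rerun for audit purposes.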

AI’s Impact on Teacher Roles and Professional Development

The integration of Artificial Intelligence (AI) in education is rapidly transforming the landscape of teaching and learning. While concerns about job displacement are valid, a more nuanced perspective reveals that AI is poised not to replace teachers, but to augment their capabilities, freeing them to focus on the uniquely human aspects of education: fostering critical thinking, nurturing creativity, and building meaningful relationships with students.

This shift necessitates a re-evaluation of teacher roles and a robust plan for professional development to ensure educators are equipped to harness the power of AI effectively.

AI’s influence on teaching roles manifests in several key ways. Firstly, AI tools automate many administrative tasks, such as grading, scheduling, and lesson planning. This frees up valuable teacher time for more impactful interactions with students.

Secondly, AI can provide personalized learning experiences, adapting to individual student needs and paces, a task traditionally demanding significant teacher effort. Finally, AI offers data-driven insights into student performance, allowing teachers to identify learning gaps and tailor their instruction more effectively. This transition requires teachers to adapt their roles from primarily content deliverers to facilitators of learning, curators of resources, and mentors guiding students’ personalized educational journeys.

Teacher Roles in AI-Enhanced Classrooms

The traditional model of teaching often involves a teacher delivering information to a homogenous group of students, assessing their understanding through standardized tests, and providing feedback in a generalized manner. AI-enhanced pedagogical approaches, however, offer a paradigm shift. AI systems can provide individualized learning pathways, adaptive assessments that adjust in real-time based on student performance, and personalized feedback tailored to specific learning needs.

Teachers, in this new model, become facilitators of learning, guiding students through their personalized journeys, providing emotional support, and fostering collaborative learning environments. They leverage AI tools to gain deeper insights into student learning, identify areas needing additional support, and adjust their instruction accordingly. The role shifts from primarily delivering content to facilitating, mentoring, and personalizing the learning experience.

For example, a teacher might use an AI-powered platform to identify students struggling with a particular concept, allowing for targeted interventions and personalized tutoring sessions.
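A platform feature of this kind need not be elaborate. The sketch below flags students whose accuracy on one concept falls below a threshold, and deliberately skips students with too few attempts to judge. The function name, the attempt format, and both thresholds are hypothetical choices for illustration, and the output is meant as a starting point for the teacher's judgment rather than a verdict.

```python
from collections import defaultdict


def students_needing_support(attempts, concept, min_attempts=3, mastery_threshold=0.6):
    """List students whose accuracy on `concept` is below the threshold.

    `attempts` is an iterable of (student, concept, is_correct) tuples.
    Students with fewer than `min_attempts` attempts are not flagged,
    because there is too little evidence either way.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for student, attempted_concept, is_correct in attempts:
        if attempted_concept != concept:
            continue
        total[student] += 1
        correct[student] += int(is_correct)
    return sorted(
        student
        for student in total
        if total[student] >= min_attempts
        and correct[student] / total[student] < mastery_threshold
    )


# Hypothetical attempt log.
attempts = [
    ("ana", "fractions", False), ("ana", "fractions", False),
    ("ana", "fractions", True), ("ana", "fractions", False),
    ("ben", "fractions", True), ("ben", "fractions", True),
    ("ben", "fractions", True),
    ("cy", "fractions", False),      # only one attempt, so not flagged
    ("ben", "decimals", False),      # different concept, ignored
]

print(students_needing_support(attempts, "fractions"))  # ['ana']
```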

Professional Development for AI Integration in Education

Effective integration of AI in education requires substantial investment in teacher professional development. A comprehensive plan should include several key components. Firstly, introductory workshops and online courses should familiarize teachers with the capabilities and limitations of various AI tools available. Secondly, practical training sessions should provide hands-on experience with integrating AI tools into lesson planning, assessment, and student feedback.

Thirdly, ongoing mentorship and peer support networks are crucial to address the challenges and share best practices amongst educators. Furthermore, the professional development should emphasize ethical considerations surrounding AI in education, including algorithmic bias and data privacy. This multifaceted approach ensures that teachers are not only technically proficient but also critically aware of the ethical implications of using AI in the classroom.

Successful integration hinges on a culture of continuous learning and adaptation within the teaching profession.

AI’s Support for Personalized Learning and Feedback

AI tools offer significant potential for personalizing learning and providing individualized feedback. AI-powered learning platforms can adapt to individual student learning styles, pacing, and strengths and weaknesses. For instance, an AI system might identify a student’s preference for visual learning and tailor the content accordingly, offering interactive simulations and diagrams instead of solely textual explanations. Furthermore, AI can analyze student work, providing immediate and specific feedback on areas needing improvement.

This allows teachers to focus on addressing specific learning gaps, providing more targeted support, and promoting deeper understanding. Imagine a scenario where an AI system analyzes a student’s essay, identifying grammatical errors, suggesting improvements in sentence structure, and highlighting areas where the argument could be strengthened. The teacher can then review the AI’s feedback and build upon it, offering personalized guidance and enriching the student’s learning experience.

This frees teachers from the time-consuming task of manual grading and allows them to engage in higher-level interactions with students, fostering critical thinking and creativity.

Accessibility and Inclusivity in AI-Powered Education

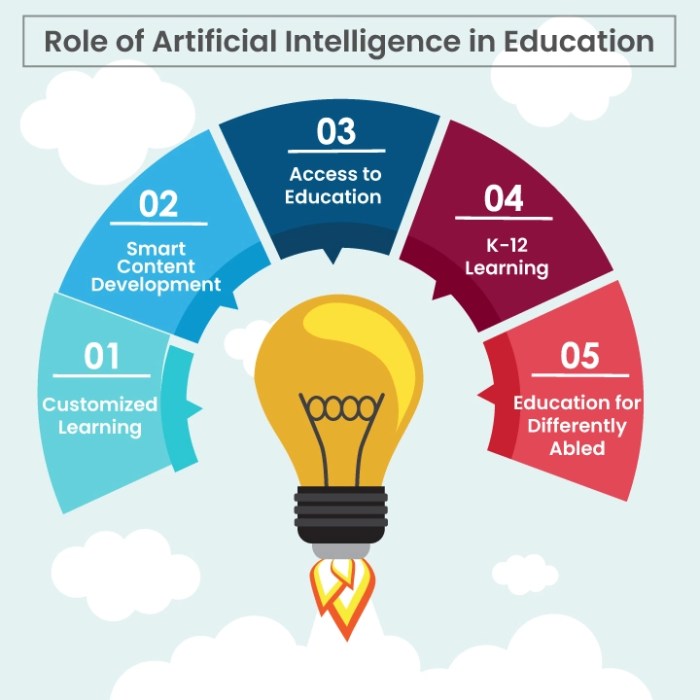

Artificial intelligence (AI) offers transformative potential for creating more inclusive and accessible learning environments. Its ability to personalize learning experiences, adapt to individual needs, and provide assistive technologies can significantly benefit students with diverse learning styles and disabilities. However, realizing this potential requires careful consideration of ethical implications and proactive strategies to ensure equitable access for all.

AI-Powered Tools Enhancing Accessibility and Inclusivity

AI can personalize learning paths, providing tailored instruction and support to meet individual student needs.

For example, AI-powered tutoring systems can offer customized feedback and remediation, addressing specific learning gaps. Speech-to-text and text-to-speech technologies, powered by AI, enable students with visual or auditory impairments to engage with educational materials more effectively. Furthermore, AI algorithms can analyze student performance data to identify potential learning difficulties early on, allowing educators to intervene promptly and provide appropriate support.

This proactive approach is crucial for ensuring that all students have the opportunity to succeed. Consider, for instance, a dyslexic student struggling with reading comprehension. An AI-powered system could identify this struggle through analysis of their reading speed and accuracy, then adapt the learning materials to utilize alternative methods such as audio narration or visual aids.

Challenges in Ensuring Equitable Access to AI Educational Tools

Despite the potential benefits, ensuring equitable access to AI-powered educational tools presents significant challenges.

Digital divides, both in terms of access to technology and reliable internet connectivity, disproportionately affect underserved communities. Furthermore, algorithmic bias in AI systems can perpetuate existing inequalities, potentially disadvantaging students from marginalized groups. The design and implementation of AI educational tools must prioritize fairness and avoid reinforcing existing biases. For example, an AI system trained on data primarily reflecting the experiences of a specific demographic might not accurately assess or support the learning needs of students from different backgrounds.

This underscores the critical need for diverse and representative datasets in the development of AI educational tools. Moreover, the lack of accessible design in the AI tools themselves can create barriers for students with disabilities. Interfaces must be designed to be usable with assistive technologies and accommodate diverse learning styles.

Design of an Accessible AI-Powered Learning Platform

An accessible AI-powered learning platform should prioritize inclusivity at every stage of its design and implementation.

This requires a multi-faceted approach, considering diverse learning styles, abilities, and technological access. The following table outlines key features and accessibility considerations:

| Feature | Description | Accessibility Consideration | Implementation Strategy |
|---|---|---|---|
| Personalized Learning Paths | AI adapts content and pacing to individual student needs and learning styles. | Supports diverse learning styles (visual, auditory, kinesthetic); accommodates varying levels of cognitive ability. | Utilize multiple modalities (text, audio, video); offer adjustable difficulty levels; incorporate progress tracking and adaptive assessments. |
| Assistive Technologies Integration | Seamless integration with screen readers, text-to-speech, and speech-to-text software. | Compliance with WCAG guidelines; compatibility with various assistive technologies. | Employ open APIs and accessible design principles; conduct thorough usability testing with diverse users. |
| Multilingual Support | Content available in multiple languages to cater to diverse linguistic backgrounds. | Accurate translation; culturally sensitive content. | Utilize machine translation with human review; incorporate diverse cultural perspectives in content creation. |
| Universal Design Principles | Design principles that ensure usability for all users, regardless of ability. | Clear and concise instructions; intuitive navigation; adaptable interface. | Employ established universal design guidelines; conduct user testing with diverse participants. |
| Offline Functionality | Access to core learning materials even without internet connectivity. | Availability of offline content; consideration for users with limited or unreliable internet access. | Develop a robust offline mode; prioritize essential content for offline access. |

Accountability and Transparency in AI Educational Systems

The deployment of artificial intelligence (AI) in education holds immense promise, but its potential benefits are inextricably linked to the ethical considerations of accountability and transparency. Without robust mechanisms to ensure these principles, the risk of unintended consequences, bias amplification, and erosion of trust is significant. This section explores the crucial role of accountability and transparency in building responsible and equitable AI-powered educational systems.

The importance of accountability and transparency stems from the inherent complexity of AI algorithms and their potential impact on student learning and well-being.

AI systems, particularly those employing machine learning, can be “black boxes,” making it difficult to understand how decisions are made. This lack of transparency can lead to unfair or discriminatory outcomes, eroding trust in the system and potentially exacerbating existing inequalities. Accountability mechanisms ensure that those responsible for developing and deploying these systems are held responsible for their actions and the impact of their creations.

Transparency, on the other hand, allows for scrutiny and understanding, promoting fairness and fostering trust.

Best Practices for Ensuring Transparency in AI Educational Algorithms and Data

Transparency in AI educational systems requires a multi-faceted approach. This includes providing clear documentation of the algorithms used, the data sets employed in training, and the decision-making processes involved. Furthermore, developers should employ explainable AI (XAI) techniques to make the reasoning behind AI’s recommendations more understandable. For example, if an AI system suggests a specific learning path for a student, the system should be able to explain the rationale behind that suggestion, making the process clear and understandable to educators, students, and parents.
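To make the idea of an explainable recommendation concrete, the sketch below uses a deliberately simple linear score, where each input's contribution can be read off directly. This is one of the simplest forms of explainability and is not how any particular product works: the feature names, the weights in `WEIGHTS`, and the threshold are all hypothetical. More complex models generally need dedicated explanation techniques.

```python
# Hypothetical weights for a transparent linear recommender. A positive
# contribution pushes toward recommending a review path.
WEIGHTS = {
    "recent_quiz_average": -2.0,   # higher quiz scores reduce the need for review
    "missed_assignments": 0.5,
    "help_requests": 0.3,
}
THRESHOLD = 0.0


def explain_recommendation(features):
    """Score a student and report each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = sum(contributions.values())
    # Largest absolute contribution first, so the main reasons lead the list.
    reasons = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {
        "recommend_review_path": score > THRESHOLD,
        "score": score,
        "reasons": reasons,
    }


# Hypothetical student: 40% quiz average, 3 missed assignments, 2 help requests.
result = explain_recommendation(
    {"recent_quiz_average": 0.4, "missed_assignments": 3, "help_requests": 2}
)
print(result["recommend_review_path"])  # True
for name, contribution in result["reasons"]:
    print(f"{name}: {contribution:+.2f}")
```

Here the largest contribution comes from missed assignments, which gives a teacher or parent a specific, checkable reason for the suggestion instead of an unexplained output.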

Regular audits of AI systems, both internal and external, are also vital to ensure ongoing compliance with ethical standards and to identify and address any potential biases or flaws. Open-source development, where possible, can also enhance transparency by allowing for independent scrutiny of the code and algorithms.

Mechanisms for Holding Developers and Institutions Accountable

Establishing accountability requires a combination of technical, legal, and ethical frameworks. Clear guidelines and regulations are needed to define the responsibilities of developers, educational institutions, and other stakeholders involved in the development and deployment of AI in education. These guidelines should specify requirements for data privacy, algorithm transparency, bias mitigation, and mechanisms for redress in case of unfair or discriminatory outcomes.

Independent oversight bodies could be established to monitor compliance with these guidelines and investigate complaints. Furthermore, mechanisms for independent audits and impact assessments are crucial to ensure that AI systems are used responsibly and ethically. Legal frameworks could include provisions for liability in cases of harm caused by AI systems, incentivizing developers to prioritize ethical considerations.

Guidelines for Promoting Transparency and Accountability in AI-Powered Educational Tools

A comprehensive set of guidelines is necessary to promote responsible AI development and deployment in education. These guidelines should cover several key areas:

- Algorithm Documentation: Detailed documentation of the algorithms used, including their limitations and potential biases.

- Data Transparency: Clear description of the data sets used in training, including their sources, characteristics, and potential biases.

- Explainable AI (XAI): Implementation of XAI techniques to make the decision-making processes of AI systems understandable.

- Bias Mitigation Strategies: Detailed description of the strategies used to identify and mitigate biases in algorithms and data.

- Regular Audits and Impact Assessments: Implementation of regular audits and impact assessments to monitor the ethical implications of AI systems.

- Redress Mechanisms: Establishment of clear mechanisms for addressing complaints and rectifying unfair or discriminatory outcomes.

- User Education and Training: Providing educators, students, and parents with training and resources to understand and use AI-powered educational tools responsibly.

These guidelines, when implemented effectively, can contribute significantly to fostering trust and ensuring the ethical use of AI in education, maximizing its benefits while mitigating potential harms. Adherence to these principles is not merely a technical exercise; it is a fundamental commitment to fairness, equity, and responsible innovation in the field of education.

The Impact of AI on Student Agency and Learning Outcomes

The integration of Artificial Intelligence (AI) in education presents a complex interplay between technological advancement and pedagogical considerations. While AI offers immense potential to personalize learning and enhance educational outcomes, its impact on student agency (the capacity for self-determination and control over one’s learning) requires careful examination. The potential for both empowerment and disempowerment necessitates a nuanced understanding of AI’s role in shaping student autonomy, self-directed learning, and critical thinking skills.

AI’s influence on student agency manifests in several ways.

Personalized learning platforms, driven by AI algorithms, can adapt to individual learning styles and paces, offering tailored content and feedback. This customization can foster a sense of ownership and control over the learning process, thereby enhancing student agency. Conversely, over-reliance on AI-driven systems that prescribe a rigid learning path can stifle exploration and limit students’ ability to make independent choices, potentially diminishing their agency.

The key lies in designing AI systems that support, rather than replace, human guidance and student initiative.

AI’s Enhancement of Student Autonomy and Self-Directed Learning

Adaptive learning platforms, powered by AI, analyze student performance and adjust the difficulty and pace of instruction accordingly. This personalized approach empowers students to learn at their own speed and focus on areas requiring more attention. For instance, a student struggling with a particular mathematical concept might receive additional practice problems and targeted explanations tailored to their specific misunderstanding. This individualized support fosters a sense of competence and control, thereby enhancing their self-directed learning capabilities.

Furthermore, AI-powered tools can provide students with access to vast amounts of information and resources, allowing them to explore topics of interest independently and pursue their own learning goals. This expands their learning horizons and encourages a sense of agency in shaping their educational journey. A well-designed AI system can act as a sophisticated tutor, providing scaffolding and support while simultaneously fostering independent learning.
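The adjustment logic behind such a platform can be sketched in a few lines. The version below raises or lowers difficulty based on recent accuracy, but lets an explicit student choice take precedence, which is one possible way to keep the learner in control. The function name, the 1 to 5 difficulty scale, and the 0.8 and 0.5 accuracy cut-offs are all hypothetical.

```python
def next_difficulty(current, recent_results, student_choice=None, lowest=1, highest=5):
    """Propose the next difficulty level on a hypothetical 1-5 scale.

    `recent_results` is a list of booleans (True for a correct answer).
    If the student picks a level, that choice wins; the system only
    keeps it within the valid range.
    """
    if student_choice is not None:
        return max(lowest, min(highest, student_choice))
    if not recent_results:
        return current  # no evidence yet, so change nothing
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= 0.8:
        proposed = current + 1   # consistently correct: step up
    elif accuracy < 0.5:
        proposed = current - 1   # struggling: step down
    else:
        proposed = current       # in between: stay at this level
    return max(lowest, min(highest, proposed))


print(next_difficulty(3, [True, True, True, True, True]))        # 4
print(next_difficulty(3, [False, False, True, False]))           # 2
print(next_difficulty(2, [True, True, True], student_choice=4))  # 4
```

Moving one level at a time avoids abrupt jumps, and the override means the system's suggestion remains a suggestion.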

AI’s Potential to Hinder Student Agency and Critical Thinking

While AI can enhance student agency, its potential to hinder it is equally significant. Over-reliance on AI-powered tools that provide ready-made answers can discourage critical thinking and problem-solving skills. Students may become overly dependent on the technology, failing to develop the ability to analyze information critically and form their own conclusions. For example, if an AI system consistently provides the correct answer without requiring students to engage in the process of arriving at the solution, it may stifle the development of crucial cognitive skills.

The absence of challenging questions and opportunities for independent thought can lead to a passive learning experience, hindering the development of agency. Moreover, the “black box” nature of some AI algorithms can obscure the decision-making process, reducing transparency and potentially limiting students’ understanding of the learning process itself.

Negative Consequences of Over-Reliance on AI-Powered Educational Tools

The over-reliance on AI-powered educational tools can lead to several negative consequences. A diminished capacity for critical thinking and problem-solving, as mentioned previously, is a major concern. Furthermore, excessive dependence on AI-driven feedback can hinder the development of self-assessment skills and metacognitive awareness – the ability to reflect on one’s own learning process. Students may become overly reliant on the system’s evaluation, neglecting the importance of self-reflection and self-regulation in their learning.

This can also lead to a decreased ability to cope with challenges and setbacks independently, as students may not develop the resilience and problem-solving skills necessary to navigate academic difficulties without the constant support of AI. Additionally, the potential for algorithmic bias in AI systems can perpetuate existing inequalities and disadvantage certain groups of students.

The Ideal Balance Between AI-Supported Learning and Human Interaction

The ideal integration of AI in education requires a careful balance between technology-supported learning and meaningful human interaction. AI should serve as a tool to enhance and personalize the learning experience, not to replace the crucial role of educators in fostering critical thinking, creativity, and social-emotional development. The focus should be on developing AI systems that support and augment the teacher’s role, providing them with valuable data and insights to better meet the individual needs of their students.

This collaborative approach ensures that AI is used responsibly and ethically, maximizing its benefits while mitigating its potential drawbacks. The human element remains indispensable in education, providing the emotional intelligence, nuanced understanding, and personalized support that AI currently lacks. The goal should be to create a synergistic relationship between AI and human educators, fostering a learning environment that is both personalized and engaging.

The ethical integration of AI in education requires a multi-pronged approach encompassing algorithmic fairness, robust data security, teacher empowerment, and inclusive design. Addressing algorithmic bias through diverse datasets and rigorous testing is paramount, alongside the establishment of clear legal and ethical guidelines for data usage. Furthermore, investing in teacher professional development and creating accessible learning platforms for diverse learners is critical.

Ultimately, the successful integration of AI hinges on a commitment to transparency, accountability, and a prioritization of human-centered design, ensuring that technology serves to amplify human potential and create a more equitable and effective educational system for all.

Question Bank

What are the potential long-term effects of AI on student creativity and critical thinking?

Over-reliance on AI tools could potentially hinder the development of independent problem-solving skills and critical thinking if students become overly dependent on AI-generated answers without engaging in deeper analysis or creative exploration. However, AI can also be used to foster creativity by providing new tools for exploration and personalized feedback that encourages innovative thinking.

How can we ensure that AI-powered educational tools are culturally sensitive and relevant to diverse student populations?

Culturally sensitive AI requires the development of algorithms trained on diverse datasets that reflect the experiences and perspectives of various cultural groups. This includes careful consideration of language, representation, and the potential for cultural biases in algorithms. Collaboration with educators and community members from diverse backgrounds is crucial to ensure cultural relevance and avoid perpetuating stereotypes.

What role will human educators play in an AI-enhanced classroom?

Human educators will remain essential, shifting from primarily delivering information to acting as facilitators, mentors, and guides. Their role will focus on fostering critical thinking, emotional intelligence, social-emotional learning, and personalized support that AI cannot fully replicate. They will curate AI tools, address ethical considerations, and ensure a balanced learning experience.

What are the potential economic implications of widespread AI adoption in education?

Widespread AI adoption could lead to both cost savings (e.g., through automation of administrative tasks) and increased costs (e.g., in developing and maintaining AI systems, providing teacher training). The long-term economic effects will depend on factors such as the efficiency gains from AI, the costs of implementation, and the impact on educational outcomes and workforce preparedness.
